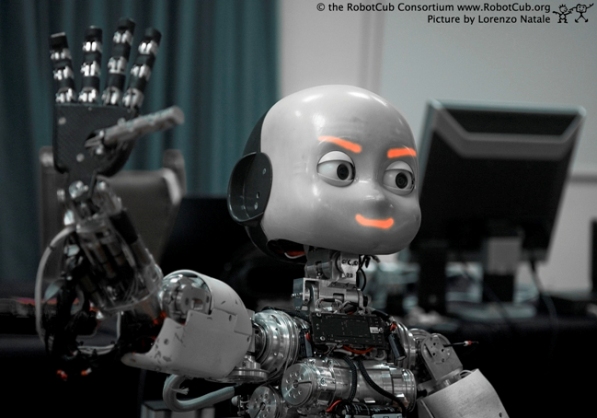

CATEGORY: Humanoid

MADE BY: The RobotCub consortium, funded by the European Commission through its Cognitive Systems and Robotics Unit.

INTRODUCTION:

RobotCub is an open source humanoid robot project funded by the European Commission through its Cognitive Systems and Robotics Unit. The project started in September 2004 and has a total duration of 60 months. Ten research centers in Europe, three in the USA and three in Japan are contributing to the project. RobotCub brings together experts from different fields such as robotics, neuroscience and developmental psychology, and it is led by the University of Genoa and the Italian Institute of Technology. The target is to have 20 robots in service at different research centers by spring 2010.

The goals of the RobotCub project are to create an advanced humanoid robot with cognitive skills and to deepen our understanding of cognition itself by studying the robot's resulting behaviors. The iCub robot has sophisticated motor skills and several sources of sensory information, such as vision, sound, touch, proprioception (the sense of the relative position of body parts) and vestibular sensing (balance and spatial orientation). Very few other robots in the world have sensors like the last two. The main idea behind equipping iCub with such sophisticated sensors is that the development of intelligence and mental processes is closely tied to the body and its sensors. For infants, skillful object manipulation and interaction with the environment and with other people all play key roles in learning about the world and developing a child's mental abilities, and RobotCub follows the same principles. The robot can crawl on all fours and sit up. Its hands allow dexterous manipulation, and its head and eyes are fully articulated. It has visual, vestibular, auditory and haptic sensory capabilities. Apart from researchers, a user community is also contributing to the development of the robot.

ASKING THE BUILDER:

Below are some questions we asked Dr. Giorgio Metta, who is primarily responsible for the technical aspects of the project. Dr. Metta is an Assistant Professor at the University of Genoa, and he was kind enough to answer our questions.

RoboticMagazine: Since the start of the project, can you briefly summarize the advancements in AI or the main achievements of this project? For instance, through the research on iCub, can you give specific examples of abilities the robot has learned or gained that required autonomous intelligent reasoning? Compared to the start of the project, what new abilities does the robot have in that sense?

Dr. Metta: There was no robot at the beginning of the project. I think the iCub is changing the way robotics is done in many respects, since it forces researchers to deliver software (through the open source license) that can be reused by others. This is the first time roboticists can do this; typically, because of the differences from one platform to another, experiments are run only once. Here we are developing a more scientific approach to robotics and embodied AI, where experiments can be replicated multiple times and where we can build upon the work of others. Specifically, in the RobotCub project we have prepared a roadmap of human development and a collection of experiments that formalize how to test a robot in a way similar to what developmental psychologists would expect from a developing cognitive agent.

RoboticMagazine: For humans, learning through interaction with the environment (through the body's sensors, object manipulation and the use of the body), which develops all the mental processes, requires an organ called the “brain”. You have your robot follow the same learning principles, but current technology does not yet have the means to replicate the human brain artificially. Without an advanced learning computer like the human brain, can you summarize how you approach the problem of reflecting all these learning and reasoning principles in the software?

Dr. Metta: There’s a body of techniques generically called “machine learning” that allows building software that can learn from mistakes or from the structure of the interaction with the environment. We can envisage simulating certain brain processes using these techniques, although the substrate is very different from that of a biological brain. Parallelism is now common in computers, and we have developed software that can exploit multiple processors to carry out intensive computations such as sensory processing or learning from large quantities of data.
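To make the idea of software that “learns from mistakes” a little more concrete, here is a minimal sketch of the classic perceptron learning rule, which changes its parameters only when it misclassifies an example. This is a generic textbook illustration we chose ourselves, not code from the iCub or RobotCub software; the function names and the toy AND-gate data are hypothetical.

```python
def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Learn weights w and bias b so that sign(w . x + b) matches labels (+1 or -1).

    Generic perceptron rule (illustrative only, not the iCub software).
    """
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            prediction = 1 if activation > 0 else -1
            if prediction != y:
                # Learn from the mistake: shift the decision boundary toward
                # classifying this example correctly next time.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                mistakes += 1
        if mistakes == 0:  # a full pass with no errors: stop training
            break
    return w, b


def predict(w, b, x):
    """Classify a single input with the learned weights."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Hypothetical toy task: learn a logical AND from four labeled examples.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [-1, -1, -1, 1]
w, b = train_perceptron(samples, labels)
print([predict(w, b, x) for x in samples])
```

The rule only guarantees convergence when the data are linearly separable, as the AND example is. Real robot learning deals with far richer, noisier sensory data, which is one reason the interview stresses parallel computation.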

RoboticMagazine: Nowadays, thousands of scientists are working on reverse engineering the brain, and we frequently read about new findings. Is there a current study in your research that incorporates the ever-increasing number of findings from brain reverse engineering into the software, or does your AI research focus only on the software? Which approach do you follow, and why?

Dr. Metta: Indeed, we are keen on this approach, i.e. looking at the brain to derive principles that can be applied to AI and engineering. For this reason, our project (RobotCub) includes neuroscientists and developmental psychologists. One example of this collaborative work can be found here:

L. Craighero, G. Metta, G. Sandini, L. Fadiga. The Mirror-Neurons System: data and models. In Progress in Brain Research, Vol. 164, “From Action to Cognition”, C. von Hofsten & K. Rosander (eds.). Elsevier, 2007. ISBN: 978-0-444-53016-5.

In this paper we describe how the brain exploits motor information for perceptual tasks and present a computational model that we used in some of our robots.

RoboticMagazine: This is an open source project, and many researchers from all over the world are collaborating on it. Can you briefly describe how this process works? What are the obstacles? We ask especially because, in addition to working in different locations, the researchers also come from different areas of expertise such as psychology, cognitive neuroscience and developmental robotics.

Dr. Metta: The major difficulty is integrating work done at different locations. The typical researcher works according to his or her own agenda, and it is difficult to adopt techniques and methods that allow the work to be easily shared. Having a common platform helps and facilitates this collaboration but does not guarantee success. Sometimes the only solution is to really work together. Again, the advantage of a common platform is that our researchers can travel from one location to another and transfer their expertise. So far this has been the most effective way of collaborating. The different areas of expertise are less of a challenge, since people are used to discussing and presenting ideas and we have a long tradition of multidisciplinary collaboration.

HERE ARE SOME STATS FOR THE iCUB ROBOT:

Control and Software: Runs on a set of embedded DSP controller cards and on a middleware called YARP (http://yarp0.sourceforge.net)

Vision: Two firewire cameras (PointGrey, Dragonfly 2)

Sound and voice processing: Two standard microphones mounted on the ears. Voice processing is based on various open packages such as Esmeralda (also on SourceForge) and Sphinx.

Height: 104 cm

Weight: 22 kg

Degrees of freedom (DOF): 53 (mainly distributed in the upper body)

Body Frame: Aluminum, steel, carbon fiber, plastic

Project duration: 5 years

Start date: September 2004

Number of motors: 53

Servo power: >100 W for the big motors; in the mW range for the eyes and fingers

Power source: 220 V/110 V AC, approx. 2 kW power supplies

External sensors and types: Cameras, microphones, joint position sensors and inertial sensors; skin and touch sensing under development (http://www.roboskin.eu)

Planned upgrades: Force control at the joint level, sensorized skin, bipedal walking

All photos are credited to robotcub.org

LINKS:

http://eris.liralab.it/wiki/Manual

http://eris.liralab.it/iCub/dox/html/index.html

http://robotcub.svn.sf.net/viewvc/robotcub/trunk/iCubPlatform/hardware/
